In this post, I’ll show you how to run the Ray application covered in the previous post as a Kubernetes deployment running on a local Kubernetes cluster deployed using kubeadm. To recall, the application executes a Directed Acyclic Graph (DAG) consisting of reading tabular data about wine characteristics and quality from AWS S3, training a RandomForest model and explaining the model’s predictions using different implementations of the KernelSHAP algorithm and comparing the results.

In this post, you’ll learn:

- Creating a local kubernetes cluster

- Creating a Ray cluster consisting of ray-head and ray-worker Kubernetes deployments on this kubernetes cluster

- Using Kubernetes Horizontal Pod Autoscaling (HPA) to automatically increase the number of worker pods when the CPU utilization exceeds a utilization percentage threshold.

- Use the metrics-server application to monitor pod resource usage and override the metric-resolution argument to the metrics-server container to 15 sec for faster resource tracking

- Lower the HPA downscale period from its default value of 5 min to 15 sec by using a custom kube-controller-manager. This reduces the time to downscale the number of worker pod replicas when the Ray application has stopped running

- Using kubernetes Secrets to pass AWS S3 access tokens to the cluster so that an application running on the cluster can assume the IAM role necessary to execute Boto3 calls on the S3 bucket containing our input data

All the code for this post is here

I will not describe Kubernetes concepts in any great detail below. I will focus on the parts specific to running a Ray application on Kubernetes.

Table of Contents

Setting up a local kubernetes cluster

The first step is to set up a local kubernetes cluster. Minikube and Kubeadm are two popular deployment tools. I decided to use kubeadm to set up my local cluster for the ease of set up. The kubeadm set up guide is pretty straightforward to follow. The tricky part is installing the pod network add-on. There are several options – Calico, Cilium and Flannel to name a few. I tried all three and ran into various arcane problems with Calico and Cilium (which are probably solvable, but stumped a kubernetes non-expert such as me). I achieved success with Flannel and thus the cluster set up script shown below uses Flannel pod add-on.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

# The steps shown below are for the most part the same as described in the instructions on how to deploy # a local kubernetes cluster using kubeadm here: # https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/ # turn off swap sudo swapoff -a # reset current state yes| sudo kubeadm reset # We use two custom settings in kubeadm: # 1. initialize kubeadm with a custom setting for horizontal pod autoscaler (hpa) downscale (lower downscale time from # default (5 min) to 15s)) so that pod downscaling occurs faster # 2. Specify a custom pod-network-cidr, which is needed by Flannel sudo kubeadm init --config custom-kube-controller-manager-config.yaml # To see default kubeadm configs, use: # kubeadm config print init-defaults mkdir -p $HOME/.kube yes| sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml |

You should run these commands one by one rather than running them all at once. Notice that we use a custom configuration while calling kubeadm init. This custom configuration sets the pod-network-cidr to a custom IP address (required by Flannel) and sets the horizontal-pod-autoscaler-downscale-stabilization argument of kube-controller-manager to 15 sec from the default value of 5 min. This setting controls the scale-down time for the kubernetes Horizontal Pod Autoscaler (HPA). Setting a lower value means that worker replicas will be shutdown quicker once the target resource utilization falls below the auto-scaling threshold. More about this when I discuss HPA.

Here’s the custom-kube-controller-manager-config.yaml that is used while initializing kubeadm:

|

1 2 3 4 5 6 7 8 |

apiVersion: kubeadm.k8s.io/v1beta2 kind: ClusterConfiguration kubernetesVersion: v1.18.0 networking: podSubnet: "10.244.0.0/16" # --pod-network-cidr for flannel controllerManager: extraArgs: horizontal-pod-autoscaler-downscale-stabilization: 15s |

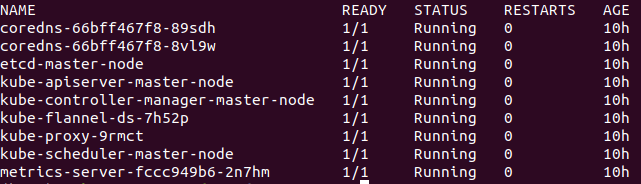

If the kubeadm setup worked correctly, you should see something like the screenshot below when you run this command:

|

1 |

kubectl get pods -n kube-system |

Note that if you previously used another pod network add-on, kubeadm sometimes tries to use a previous pod network state leading to arcane initialization errors. One way around such errors is to remove any Container Networking Interface (CNI) related files for your unused pod network add-on. These files are located in /etc/cni/net.d/ folder. I’m not sure if this is the right way to get around initialization problems due to unused pod network add-ons, but it worked for me.

Finally, By default, your cluster will not schedule Pods on the control-plane node for security reasons. If you want to be able to schedule Pods on the control-plane node, (for example for a single-machine

Kubernetes cluster for development) run:

|

1 |

kubectl taint nodes --all node-role.kubernetes.io/master- |

Set up metrics-server

Metrics Server is a scalable, efficient source of container resource metrics for Kubernetes built-in autoscaling pipelines. Metrics Server collects resource metrics from Kubelets and exposes them in Kubernetes apiserver through Metrics API for use by Horizontal Pod Autoscaler and Vertical Pod Autoscaler. Metrics API can also be accessed by kubectl top, making it easier to debug autoscaling pipelines.

To collect resource metrics more frequently, I set the –metric-resolution argument to the metrics-server container to 15 sec. See Appendix for the full metrics-server deployment that I used.

Ray cluster deployment

Now that we have a local kubernetes cluster , let’s deploy Ray on it. Here’s the Ray deployment yaml.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 |

# Ray head node service, allowing worker pods to discover the head node. apiVersion: v1 kind: Service metadata: namespace: ray name: ray-head spec: ports: # Redis ports. - name: redis-primary port: 6379 targetPort: 6379 - name: redis-shard-0 port: 6380 targetPort: 6380 - name: redis-shard-1 port: 6381 targetPort: 6381 # Ray internal communication ports. - name: object-manager port: 12345 targetPort: 12345 - name: node-manager port: 12346 targetPort: 12346 selector: component: ray-head --- apiVersion: apps/v1 kind: Deployment metadata: namespace: ray name: ray-head spec: # Do not change this - Ray currently only supports one head node per cluster. replicas: 1 selector: matchLabels: component: ray-head type: ray template: metadata: labels: component: ray-head type: ray spec: # If the head node goes down, the entire cluster (including all worker # nodes) will go down as well. If you want Kubernetes to bring up a new # head node in this case, set this to "Always," else set it to "Never." restartPolicy: Always # This volume allocates shared memory for Ray to use for its plasma # object store. If you do not provide this, Ray will fall back to # /tmp which cause slowdowns if is not a shared memory volume. volumes: - name: dshm emptyDir: medium: Memory - name: awstoken secret: secretName: session-token containers: - name: ray-head image: myshap:latest # We want to use a local image, as opposed to from a container repo imagePullPolicy: Never # Pass SSO tokens to the container where they can be accessed as environment variables envFrom: - secretRef: name: session-token # imagePullPolicy: Always command: [ "/bin/bash", "-c", "--"] args: - "ray start --head --node-ip-address=$MY_POD_IP --port=6379 --redis-shard-ports=6380,6381 --num-cpus=$MY_CPU_REQUEST \ --object-manager-port=12345 --node-manager-port=12346 --dashboard-port 8265 --redis-password=password --block" ports: - containerPort: 6379 # Redis port. - containerPort: 6380 # Redis port. - containerPort: 6381 # Redis port. - containerPort: 12345 # Ray internal communication. - containerPort: 12346 # Ray internal communication. - containerPort: 8265 # Ray dashboard # This volume allocates shared memory for Ray to use for its plasma # object store. If you do not provide this, Ray will fall back to # /tmp which cause slowdowns if is not a shared memory volume. volumeMounts: - mountPath: /dev/shm name: dshm - name: awstoken mountPath: "/etc/awstoken" readOnly: true env: - name: MY_POD_IP valueFrom: fieldRef: fieldPath: status.podIP # This is used in the ray start command so that Ray can spawn the # correct number of processes. Omitting this may lead to degraded # performance. - name: MY_CPU_REQUEST valueFrom: resourceFieldRef: resource: requests.cpu resources: limits: cpu: "3000m" requests: cpu: "3000m" memory: "512Mi" --- apiVersion: apps/v1 kind: Deployment metadata: namespace: ray name: ray-worker spec: # Change this to scale the number of worker nodes started in the Ray cluster. replicas: 1 selector: matchLabels: component: ray-worker type: ray template: metadata: labels: component: ray-worker type: ray spec: restartPolicy: Always volumes: - name: dshm emptyDir: medium: Memory containers: - name: ray-worker image: myshap:latest imagePullPolicy: Never # Pass SSO tokens to the container where they can be accessed as environment variables envFrom: - secretRef: name: session-token command: ["/bin/bash", "-c", "--"] args: - "ray start --node-ip-address=$MY_POD_IP --num-cpus=$MY_CPU_REQUEST --address=$RAY_HEAD_SERVICE_HOST:$RAY_HEAD_SERVICE_PORT_REDIS_PRIMARY \ --object-manager-port=12345 --node-manager-port=12346 --redis-password=password --block" ports: - containerPort: 12345 # Ray internal communication. - containerPort: 12346 # Ray internal communication. volumeMounts: - mountPath: /dev/shm name: dshm env: - name: MY_POD_IP valueFrom: fieldRef: fieldPath: status.podIP # This is used in the ray start command so that Ray can spawn the # correct number of processes. Omitting this may lead to degraded # performance. - name: MY_CPU_REQUEST valueFrom: resourceFieldRef: resource: requests.cpu resources: limits: cpu: "3000m" requests: cpu: "3000m" memory: "512Mi" |

This is borrowed from deploying on Kubernetes tutorial in Ray’s documentation. As the tutorial says, a Ray cluster consists of a single head node and a set of worker nodes . This is implemented as:

- A ray-head Kubernetes Service that enables the worker nodes to discover the location of the head node on start up.

- A ray-head Kubernetes Deployment that backs the ray-head Service with a single head node pod (replica).

- A ray-worker Kubernetes Deployment with multiple worker node pods (replicas) that connect to the ray-head pod using the ray-head Service

I’ve modified the configuration in Ray’s documentation by adding kubernetes secret injection and CPU resource limits to both the ray-head and ray-worker deployments (required for HPA to work). Let’s look at each in more detail.

Setting up Kubernetes secrets

The input data used in our application is stored in a S3 bucket with a bucket access policy that allows Get/Put/DeleteObject operations on this bucket. This bucket policy has an IAM role attached which can be assumed to get temporary STS credentials to make Boto calls to access the contents of the S3 bucket. For more information about this set up, see “Reading data from S3” section in my previous post. One way to pass these temporary credentials is to use environment variables. However, Kubernetes offers a better way to manage such information called kubernetes secret. A Secret is an object that contains a small amount of sensitive data such as a password.

The shell script below shows how to use aws sts assume-role command to obtain temporary credentials. These credentials are written to a file which can be passed to a Docker run command using the –env-file argument. The credentials then become part of the environment and accessible from a Python program using os.environ[‘ENV_NAME’]. The credentials are also used to create a kubernetes secret. Notice the use of the generic keyword in the kubectl create secret command. This signifies that we are creating an opaque secret, rather than one of the predefined kubernetes secret types.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 |

get_session_creds() { # Read IAMRole from file. This IAMRole is attached to the bucket policy and allows read access to the bucket role=$(head -n 1 iamroles.txt) # Get the Access keys for your AWS dev profile. Change this to your profile name PROFILE_NAME=ankur-dev unset AWS_ACCESS_KEY_ID unset AWS_SECRET_ACCESS_KEY # This is important, otherwise the sts assume-role command below can fail unset AWS_SESSION_TOKEN AWS_ACCESS_KEY_ID=$(aws configure get aws_access_key_id --profile=$PROFILE_NAME) AWS_SECRET_ACCESS_KEY=$(aws configure get aws_secret_access_key --profile=$PROFILE_NAME) export AWS_ACCESS_KEY_ID export AWS_SECRET_ACCESS_KEY # echo $AWS_SECRET_ACCESS_KEY # echo $AWS_ACCESS_KEY_ID # see: https://github.com/rik2803/aws-sts-assumerole/blob/master/assumerole # Get temporary creds that can be used to assume this role creds=$(aws sts assume-role --role-arn ${role} --role-session-name AWSCLI-Session) AWS_ACCESS_KEY_ID_SESS=$(echo ${creds} | jq --raw-output ".Credentials[\"AccessKeyId\"]") AWS_SECRET_ACCESS_KEY_SESS=$(echo ${creds} | jq --raw-output ".Credentials[\"SecretAccessKey\"]") AWS_SESSION_TOKEN=$(echo ${creds} | jq --raw-output ".Credentials[\"SessionToken\"]") # Write the creds to a file. This file can be passed as argument to a Docker run command using the --env-file option env_file='./env.list' if [ -e $env_file ]; then echo "AWS_ACCESS_KEY_ID_SESS="$AWS_ACCESS_KEY_ID_SESS >$env_file echo "AWS_SECRET_ACCESS_KEY_SESS="$AWS_SECRET_ACCESS_KEY_SESS >>$env_file echo "AWS_SESSION_TOKEN="$AWS_SESSION_TOKEN >>$env_file fi # Create kubernetes secret to pass SSO tokens to the pod # first delete any existing secrets kubectl delete secret session-token -n ray # now create a secret. The use of generic means an opaque secret is created kubectl create secret generic session-token -n ray --from-literal=AWS_ACCESS_KEY_ID_SESS=$AWS_ACCESS_KEY_ID_SESS \ --from-literal=AWS_SECRET_ACCESS_KEY_SESS=$AWS_SECRET_ACCESS_KEY_SESS \ --from-literal=AWS_SESSION_TOKEN=$AWS_SESSION_TOKEN # to see if secret was created: #kubectl edit secret session-token -n ray # or # kubectl get secret/session-token -n ray -o jsonpath='{.data}' } |

To use this script, you must have a iamroles.txt file containing your IAM role ARN.

To use a Secret, a Pod needs to reference the Secret. A Secret can be used with a Pod in three ways:

- As files in a volume mounted on one or more of its containers.

- As container environment variable.

- By the kubelet when pulling images for the Pod.

Here, we’ll use the first and second method in ray-head and the second method in ray-worker.

In the ray-head deployment, secrets are being injected in two ways:

- Using envFrom to define all of the Secret’s data as container environment variables. The key from the Secret becomes the environment variable name in the Pod.

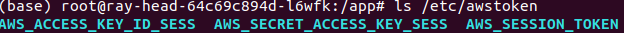

- Using volume mounts. The secret data is exposed to Containers in the Pod through a Volume.

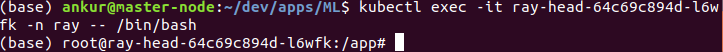

When you deploy the cluster, you can exec into the running head pod to verify that the secrets have been injected as expected:

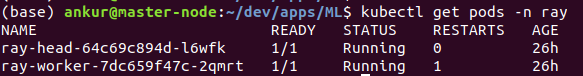

- Get pods running in the Ray namespace

- exec into the head pod:

- Use the command below to print the value of AWS_ACCESS_KEY_ID_SESS

1(echo "import os"; echo "print(os.environ['AWS_ACCESS_KEY_ID_SESS'])") | python

verify that the value matches the output of the sts assume-role command - Now lets check the content of the /etc/awstoken directory. We should see the AWS credentials used to create the kubernetes secret.

Verify that the contents of these files matches the output of sts assume-role command

You only need one of these methods to inject secrets. The worker pods only use the environment variable method.

Kubernetes Horizontal Pod Autoscaler

The Horizontal Pod Autoscaler (HPA) automatically scales the number of Pods in a replication controller, deployment or replica set based on observed CPU utilization or a custom application metric. For details about the algorithm used by the Autoscaler, see HPA documentation. In our HPA configuration, we specify the deployment subject to autoscaling (ray-worker), the minimum and maximum number of replicas and the target CPU utilization percentage. When the observed CPU utilization percentage exceeds the target, autoscaling will kick in launching new ray-worker pods. These pods will automatically join the ray-head deployment, ready to take on work.

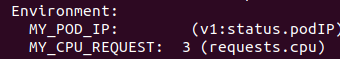

In the ray-cluster configuration YAML, notice that I’ve added CPU resource limits to both the ray-head and ray-worker deployments. This is necessary for the HPA to work because new worker replicas are launched when pod CPU utilization percentage exceeds a threshold. For utilization percentage to be calculated, we need a resource limit. The requests.cpu field is also used to set the value of the num-cpus argument passed to the ray-head and ray-worker deployments through the MY_CPU_REQUEST variable.

You can verify the values of the environment variables by using kubectl describe pods <pod-name>

Note that requests.cpu is specified in milli-cpus

let’s now create a HPA that automatically launches additional worker nodes when worker pod CPU resource utilization percentage exceeds a threshold. Then we’ll run the Python application and check for the number of worker replicas.

Here’s the HPA configuration yaml (called ray-autoscaler.yaml).

|

1 2 3 4 5 6 7 8 9 10 11 12 |

apiVersion: autoscaling/v1 kind: HorizontalPodAutoscaler metadata: name: shap-autoscaler spec: scaleTargetRef: apiVersion: apps/v1 kind: Deployment name: ray-worker minReplicas: 1 maxReplicas: 3 targetCPUUtilizationPercentage: 30 |

To create HPA, use the following commands:

|

1 2 3 |

# Create horizontal Autoscaler kubectl delete -f ray-autoscaler.yaml -n ray kubectl create -f ray-autoscaler.yaml -n ray |

Note that the HPA is created in the same namespace as the rest of the Ray deployment.

To verify that the HPA has been successfully created, you can use:

|

1 2 3 4 |

# to get/describe HPAs in the ray namespace kubectl get hpa -n ray # to get info about a specific HPA kubectl get ray-autoscaler.yaml -n ray |

Having created the HPA, we can now run our Python application:

|

1 2 3 4 5 6 7 8 |

# Run our program # --local keyword instructs Ray to run this program on an existing cluster, # rather than create a new one # --use-s3 tells the program to load the input data to train the RandomForest model from S3 rather than from a local # file. # --num_rows is the number of rows for which shap values are calculated. Increasing this number will make the program # run for longer kubectl exec deployments/ray-head -n ray -- python source/kernel_shap_test_ray.py --local=0 --use_s3=1 --num_rows=32 |

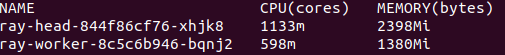

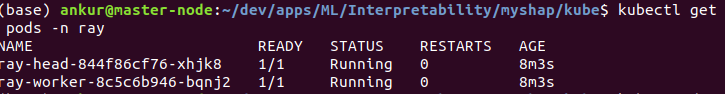

Let’s verify that our pods are running (by using kubectl -get pods -n ray)

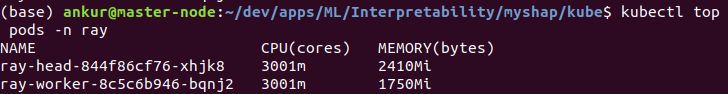

After a few seconds, the CPU utilization has reached limits.cpu.

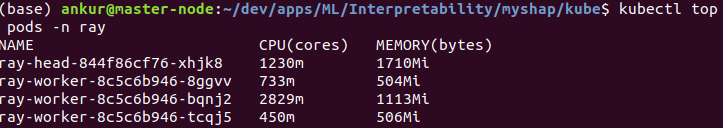

At this point, HPA will launch two additional worker replicas, increasing the number of worker replicas to 3 (max allowed in the HPA settings)

Once the shapley value calculations have finished, check the number of pods. You’ll see the number of worker replicas scale down to 1 after a few seconds. Recall we set horizontal-pod-autoscaler-downscale-stabilization argument of kube-controller-manager to 15 sec while initializing kubeadm. Doing so lowers the down scale time so that the worker replicas are terminated faster.

Conclusion

In this post you learnt how to use kubeadm to set up a local kubernetes cluster, deploy a Ray cluster on this kubernetes cluster, passing AWS STS credentials using kubernetes Secret and launching new worker replicas using kubernetes HPA. The script used to execute the steps shown above, starting from setting up metrics-server is here:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 |

# Next steps: install metrics-server kubectl create -f metrics-server/components.yaml # Note the --metric-resolution=15s setting # Now you can use kubectl top pods to see CPU/Memory utilization for a pod # create a namespace for ray kubectl apply -f ray-namespace.yaml # run aws configure # create secret. Note: must have run aws configure --profile <profile_name> before this step . ../get_session_creds.sh # need to go one directory up because iamroles.txt file needed by get_session_creds script is in top level directory cd .. get_session_creds cd kube # create our deployment kubectl apply -f ray-cluster.yaml -n ray # give some time for the pods to come up sleep 3s # Create horizontal Autoscaler kubectl delete -f ray-autoscaler.yaml -n ray kubectl create -f ray-autoscaler.yaml -n ray # to get/describe HPAs in the ray namespace kubectl get hpa -n ray # to get info about a specific HPA # kubectl get ray-autoscaler.yaml -n ray # Run our program # --local keyword instructs Ray to run this program on an existing cluster, # rather than create a new one # --use-s3 tells the program to load the input data to train the RandomForest model from S3 rather than from a local # file. # num_rows is the number of rows for which shap values are calculated. Increasing this number will make the program # run for longer kubectl exec deployments/ray-head -n ray -- python source/kernel_shap_test_ray.py --local=0 --use_s3=1 --num_rows=32 |

Appendix

Here’s the metrics-server deployment I used. You can cut and paste this into a yaml file and then deploy it using kubectl apply -f file_name.yaml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 |

--- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: system:aggregated-metrics-reader labels: rbac.authorization.k8s.io/aggregate-to-view: "true" rbac.authorization.k8s.io/aggregate-to-edit: "true" rbac.authorization.k8s.io/aggregate-to-admin: "true" rules: - apiGroups: ["metrics.k8s.io"] resources: ["pods", "nodes"] verbs: ["get", "list", "watch"] --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: metrics-server:system:auth-delegator roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:auth-delegator subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: metrics-server-auth-reader namespace: kube-system roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: extension-apiserver-authentication-reader subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system --- apiVersion: apiregistration.k8s.io/v1beta1 kind: APIService metadata: name: v1beta1.metrics.k8s.io spec: service: name: metrics-server namespace: kube-system group: metrics.k8s.io version: v1beta1 insecureSkipTLSVerify: true groupPriorityMinimum: 100 versionPriority: 100 --- apiVersion: v1 kind: ServiceAccount metadata: name: metrics-server namespace: kube-system --- apiVersion: apps/v1 kind: Deployment metadata: name: metrics-server namespace: kube-system labels: k8s-app: metrics-server spec: selector: matchLabels: k8s-app: metrics-server template: metadata: name: metrics-server labels: k8s-app: metrics-server spec: serviceAccountName: metrics-server volumes: # mount in tmp so we can safely use from-scratch images and/or read-only containers - name: tmp-dir emptyDir: {} containers: - name: metrics-server image: k8s.gcr.io/metrics-server/metrics-server:v0.3.7 imagePullPolicy: IfNotPresent args: - --cert-dir=/tmp - --secure-port=4443 - --kubelet-insecure-tls - --kubelet-preferred-address-types=InternalIP - --metric-resolution=15s ports: - name: main-port containerPort: 4443 protocol: TCP securityContext: readOnlyRootFilesystem: true runAsNonRoot: true runAsUser: 1000 volumeMounts: - name: tmp-dir mountPath: /tmp nodeSelector: kubernetes.io/os: linux --- apiVersion: v1 kind: Service metadata: name: metrics-server namespace: kube-system labels: kubernetes.io/name: "Metrics-server" kubernetes.io/cluster-service: "true" spec: selector: k8s-app: metrics-server ports: - port: 443 protocol: TCP targetPort: main-port --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: system:metrics-server rules: - apiGroups: - "" resources: - pods - nodes - nodes/stats - namespaces - configmaps verbs: - get - list - watch --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: system:metrics-server roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:metrics-server subjects: - kind: ServiceAccount name: metrics-server namespace: kube-system |

Leave a Reply