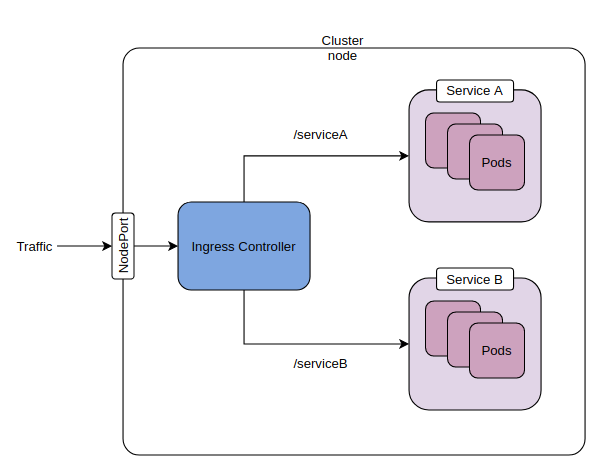

In this post, I’ll show you how to set up an ingress controller on a local kubernetes cluster to access a service that exposes a local flask server. The post doesn't cover kubernetes concepts such as pods, services, or ingress controllers, nor how to write and containerize a simple flask server; there is plenty of information about these in the kubernetes documentation and various blog posts. Instead, I’ll focus on how to troubleshoot when you try to reach your flask server endpoint through an ingress controller and get a 404 error.

Note that there are two nginx-kubernetes ingress controllers:

- ingress-nginx: supported by kubernetes community

- kubernetes-ingress: maintained by NGINX (now part of F5).

This doc outlines the differences. I’m using the first one, which seems to be far more popular.

The scripts and yaml files used in this post are in this repo. We’ll first set up a single node kubernetes cluster on an Ubuntu workstation, and then configure some simple pods and services which we’ll try to access via an ingress controller. Then, we’ll do the same with a simple flask server. Along the way, I’ll show you some troubleshooting steps to help debug 404 messages from the ingress controller and the flask server.

Setting up a local kubernetes cluster

Here’s a script to set up a single-node kubernetes cluster on an Ubuntu workstation using kubeadm.

```bash
# The steps shown below are for the most part the same as described in the instructions on how to deploy
# a local kubernetes cluster using kubeadm here:
# https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

# turn off swap
sudo swapoff -a

# reset current state
yes | sudo kubeadm reset

# We use two custom settings in kubeadm:
# 1. initialize kubeadm with a custom setting for horizontal pod autoscaler (hpa) downscale (lower downscale time from
#    default (5 min) to 15s) so that pod downscaling occurs faster
# 2. Specify a custom pod-network-cidr, which is needed by Flannel
sudo kubeadm init --config custom-kube-controller-manager-config.yaml
# To see default kubeadm configs, use:
# kubeadm config print init-defaults

mkdir -p $HOME/.kube
yes | sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml
# linux foundation kubernetes tutorial recommends calico networking plugin
# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

# to get rid of old cni plugins, delete cni files in /etc/cni/net.d/
# IMPORTANT: Do this for worker nodes also:
# sudo rm -rf /etc/cni/net.d
# sudo rm -rf /var/lib/cni

# Wait for all pods to come up
sleep 5s

# verify all kube-system pods are running
kubectl get pods -n kube-system

# By default, your cluster will not schedule Pods on the control-plane node for security reasons.
# If you want to be able to schedule Pods on the control-plane node, for example for a single-machine
# Kubernetes cluster for development, run:
kubectl taint nodes --all node-role.kubernetes.io/master-

# deploy nginx ingress controller (in the ingress-nginx namespace)
# See: https://github.com/kubernetes/ingress-nginx/blob/master/docs/deploy/index.md#bare-metal
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.46.0/deploy/static/provider/baremetal/deploy.yaml
# To check if all is ok:
# kubectl get all -n ingress-nginx

# to see token list
kubeadm token list

# To enable kubectl autocomplete. Must start a new shell after this command
# echo 'source <(kubectl completion bash)' >>~/.bashrc
alias k=kubectl
source <(kubectl completion bash) # completion will save a lot of time and avoid typos
source <(kubectl completion bash | sed 's/kubectl/k/g') # so completion works with the alias "k"

############ CLUSTER SET UP COMPLETE #####################
```
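The script above waits a fixed `sleep 5s` before listing the kube-system pods, which may not be long enough on a slower machine. Here is a minimal Python sketch, assuming `kubectl` is on your PATH and already configured, that polls `kubectl get pods -n kube-system -o json` until every pod is Running with all of its containers ready. The helper names `not_ready_pods` and `wait_for_namespace` are my own, not part of any tool.

```python
import json
import subprocess
import time


def not_ready_pods(pods: dict) -> list:
    """Return names of pods that are not Running with every container ready."""
    pending = []
    for pod in pods.get("items", []):
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        statuses = status.get("containerStatuses", [])
        # a pod with no container statuses yet is treated as not ready
        all_ready = bool(statuses) and all(c.get("ready") for c in statuses)
        if status.get("phase") != "Running" or not all_ready:
            pending.append(name)
    return pending


def wait_for_namespace(namespace: str = "kube-system", timeout_s: float = 120, poll_s: float = 3) -> bool:
    """Poll kubectl until all pods in the namespace are ready, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        out = subprocess.run(
            ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
            capture_output=True, text=True, check=True,
        ).stdout
        pending = not_ready_pods(json.loads(out))
        if not pending:
            return True
        print("still waiting for:", ", ".join(pending))
        time.sleep(poll_s)
    return False


if __name__ == "__main__":
    print("all ready" if wait_for_namespace() else "timed out")
```

Pods left behind by finished Jobs never become Running, so this check is only meant for namespaces like kube-system. If you prefer to stay in the shell, `kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=120s` does a similar job.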

You can ignore the part that sets a custom setting for the horizontal pod autoscaler (hpa); it is not relevant to this post. Note that we expose the nginx ingress controller through a NodePort service, which is the simplest option to set up and understand, especially if you are not using a cloud provider. See this installation guide for details.

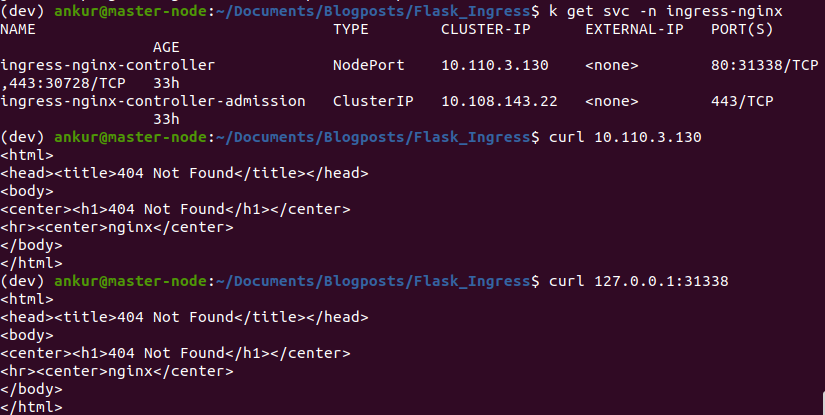

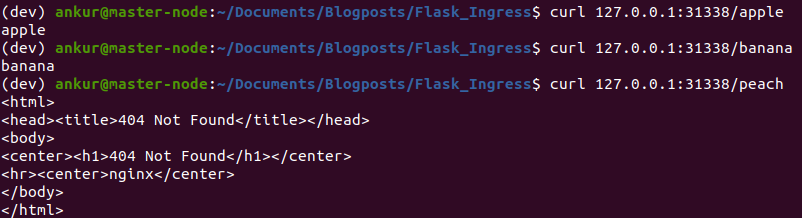

Accessing some simple services through the ingress controller

After completing the setup shown above, verify that the ingress controller service is running in the ingress-nginx namespace. As shown in the screenshot below, you can reach this service by curling the cluster IP of the ingress-nginx-controller service or the node port it exposes. Either should return a “404 Not Found” page from nginx, since no ingress rules exist yet. If this doesn't work, something is wrong with your setup and none of what follows will work either.
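To avoid copying the cluster IP and node port by hand, here is a sketch that reads both from `kubectl get svc ingress-nginx-controller -n ingress-nginx -o json`. It assumes the service exposes a port named `http`, as the bare-metal deploy.yaml does, and that you curl from the node itself (hence the `127.0.0.1` default). `controller_urls` is a hypothetical helper name.

```python
import json
import subprocess


def controller_urls(svc: dict, node_ip: str = "127.0.0.1") -> dict:
    """Build the two URLs for the ingress controller's HTTP port from a Service object."""
    port = next(p for p in svc["spec"]["ports"] if p.get("name") == "http")
    return {
        # the service's cluster IP and service port (reachable from the node)
        "cluster_ip": f"http://{svc['spec']['clusterIP']}:{port['port']}/",
        # the NodePort that Kubernetes assigned to the http port
        "node_port": f"http://{node_ip}:{port['nodePort']}/",
    }


if __name__ == "__main__":
    raw = subprocess.run(
        ["kubectl", "get", "svc", "ingress-nginx-controller", "-n", "ingress-nginx", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    for kind, url in controller_urls(json.loads(raw)).items():
        # curl either URL; with no ingress rules yet you should see nginx's 404 page
        print(kind, url)
```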

If you are curious about how the nginx server behind the ingress controller is configured, you can exec into the ingress-nginx-controller pod, as shown below. See this for more info.


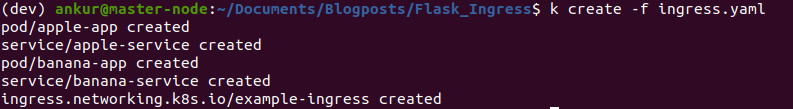
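The generated nginx.conf is long, so it can help to pull out just the `location` directives and check which paths the controller is actually routing. A small sketch, assuming you first saved the file with something like `kubectl exec -n ingress-nginx <controller-pod> -- cat /etc/nginx/nginx.conf > nginx.conf` (fill in your own pod name); the regex only handles one `location` per line, which is how the controller writes them in my experience.

```python
import re
import sys

# Matches lines such as:  location /my-server/ {   or   location ~* "^/my-server(/|$)(.*)" {
LOCATION_RE = re.compile(r"^\s*location\s+(.+?)\s*\{", re.MULTILINE)


def locations(conf_text: str) -> list:
    """Return the argument of every `location` block in an nginx config."""
    return LOCATION_RE.findall(conf_text)


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "nginx.conf"
    with open(path) as f:
        for loc in locations(f.read()):
            print(loc)
```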

Now, we’ll set up a couple of pods and expose them through services. Then, we’ll create an ingress resource that specifies path-based routing to those services. The screenshots below show this in action. See ingress.yaml in the github repo for configuration details.

All of this should work out of the box.
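With `pathType: Prefix`, the Kubernetes Ingress spec matches element by element on `/`-separated path segments, so `/my-server` matches `/my-server` and `/my-server/mars` but not `/my-serverx`, and when several paths match, the longest one wins. The sketch below models those spec semantics only; it is not ingress-nginx's code, which translates rules into nginx `location` blocks and may differ in edge cases. The service names in the usage are hypothetical.

```python
def segments(path: str) -> list:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [s for s in path.split("/") if s]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match, as described for pathType: Prefix."""
    rule, req = segments(rule_path), segments(request_path)
    return req[: len(rule)] == rule


def route(rules: dict, request_path: str):
    """Return the backend of the longest matching rule path, or None if nothing matches."""
    matches = [p for p in rules if prefix_matches(p, request_path)]
    if not matches:
        return None  # the controller would answer with its default 404 page
    return rules[max(matches, key=lambda p: len(segments(p)))]


if __name__ == "__main__":
    rules = {"/apple": "apple-svc", "/my-server": "my-server-svc"}  # hypothetical services
    for path in ["/apple", "/my-server/mars", "/my-serverx"]:
        print(path, "->", route(rules, path))
```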

Creating a containerized flask server

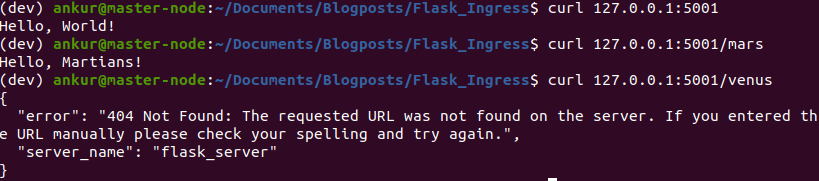

Now, we’ll create a very simple flask server that exposes a couple of endpoints and create an image that runs it. We will set up a custom 404 error message, so we can distinguish our server 404 responses from those of nginx. Then, we’ll create a kubernetes pod using the server image and a service that exposes the server.

```python
from flask import Flask, jsonify


def page_not_found(e):
    # Custom 404 handler: return JSON that names our server, so its 404s
    # can be told apart from the HTML 404 page served by nginx
    return jsonify(server_name="flask_server", error=str(e)), 404


app = Flask(__name__)
app.register_error_handler(404, page_not_found)


@app.route('/')
def hello_world():
    return 'Hello, World!'


@app.route('/mars')
def hello_mars():
    return 'Hello, Martians!'


if __name__ == '__main__':
    # host must be 0.0.0.0, otherwise won't work from inside docker container
    app.run(host='0.0.0.0', debug=True, use_reloader=False, port=5001)
```

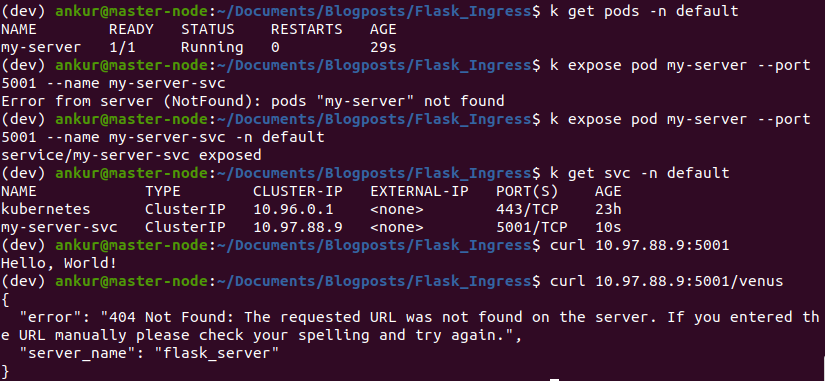

Next, we create a docker image and a pod that runs it. We’ll also expose the pod through a service and verify that we can curl it. See the Dockerfile and server_pod.yaml in the github repo for this post.

Next, we’ll add a route for our service to the ingress by adding the following rule to ingress.yaml (it is already included in the ingress.yaml in the github repo).

```yaml
- http:
    paths:
    - path: /my-server
      pathType: Prefix
      backend:
        service:
          name: my-server-svc
          port:
            number: 5001
```

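We intend traffic directed at 127.0.0.1:31338/my-server (replace 31338 with your controller's node port) to be sent to the my-server-svc service. The ingress controller resolves my-server-svc by name through the Kubernetes API, so you never have to hard-code an IP address. Note that an Ingress can only route to services in its own namespace; to reach a service in another namespace you need a workaround, such as an ExternalName service in the ingress's namespace.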

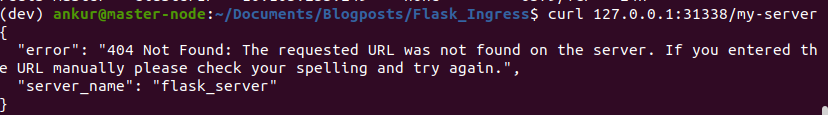

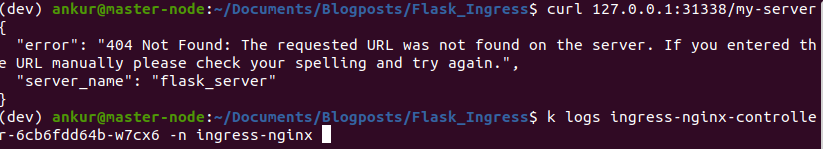

Delete and recreate the ingress resources, then curl the endpoint. You can use kubectl delete -f ingress.yaml --force to delete all resources specified in the yaml. We still get a 404, but from the JSON body we can tell that it is coming from our flask server rather than from nginx.

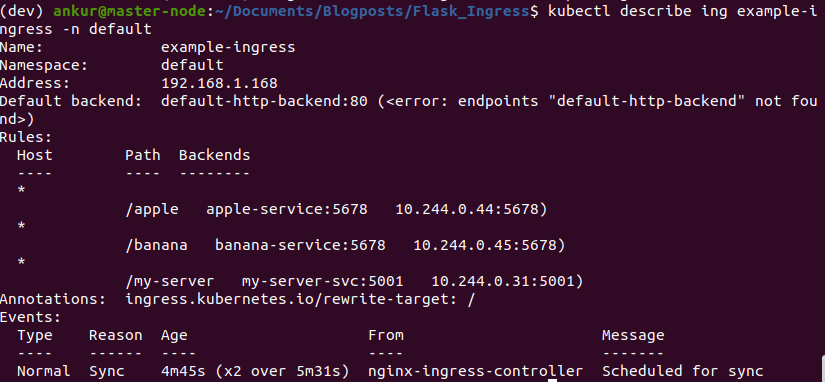
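Telling the two kinds of 404 apart is the key troubleshooting step here. A standard-library sketch: `fetch` returns the status and body even for error responses (urllib raises `HTTPError` for them, which can still be read), and `classify_404` looks for the JSON our flask error handler returns versus the HTML page nginx serves. Both helper names are my own, and the node-port URL in the usage is an assumption; substitute yours.

```python
import json
import urllib.error
import urllib.request


def fetch(url: str, timeout: float = 5.0):
    """GET a URL and return (status, body), including 4xx/5xx responses."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # HTTPError doubles as a response object, so the error body is still readable
        return err.code, err.read().decode("utf-8", "replace")


def classify_404(body: str) -> str:
    """Guess who produced a 404: our flask server (JSON) or nginx (HTML)."""
    try:
        data = json.loads(body)
    except ValueError:
        data = None
    if isinstance(data, dict) and data.get("server_name") == "flask_server":
        return "flask"
    if "nginx" in body.lower():
        return "nginx"
    return "unknown"


if __name__ == "__main__":
    status, body = fetch("http://127.0.0.1:31338/my-server")  # assumed node port
    print(status, classify_404(body) if status == 404 else body)
```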

If we run kubectl describe ingress on our ingress resource, we can see that it added a rule for our service and resolved the backend address behind my-server-svc.

If you delete and recreate the my-server-svc service, kubernetes assigns it a new IP, but the ingress keeps working without any change: it refers to the service by name, and the controller picks up the new addresses automatically. This is an important benefit of using ingress: you access your services by hostname or path rather than worrying about dynamic IP addresses.

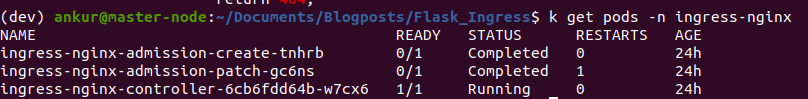

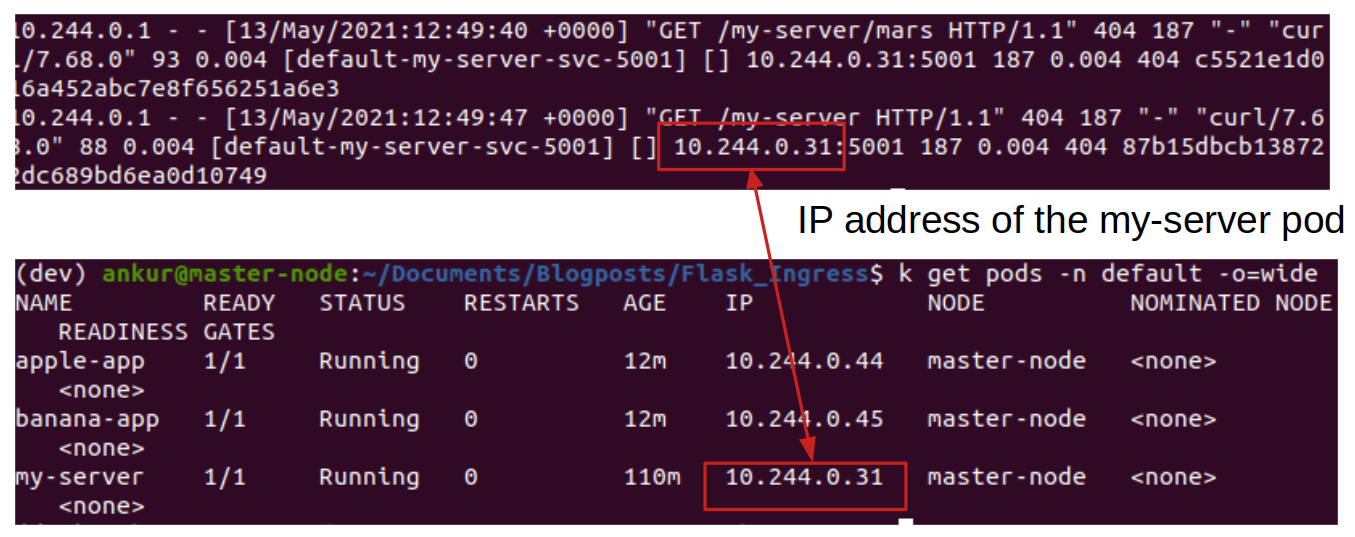

Next, let’s look at the logs of the ingress-nginx-controller pod. You can get the name of the pod running the ingress controller with the kubectl get pods -n ingress-nginx command.
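`kubectl get pods -n ingress-nginx` also lists the completed admission-webhook job pods, so here is a sketch that picks the controller by its `app.kubernetes.io/component=controller` label (check your own labels with `kubectl get pods -n ingress-nginx --show-labels`) and then pulls the method, path, status, and upstream name out of each access-log line. The regex assumes the controller's default access-log format, where the quoted request line is followed by the status and, a few fields later, the upstream name in square brackets; a customized log format would need a different pattern. The sample log line in the tests is made up for illustration.

```python
import json
import re
import subprocess

# Assumes the default ingress-nginx access-log format (request line, status, ..., [upstream name]).
ACCESS_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*? \[(?P<upstream>[^\]]*)\]'
)


def controller_pod(pods: dict) -> str:
    """Return the name of the running controller pod from `kubectl get pods -o json` output."""
    for pod in pods.get("items", []):
        labels = pod["metadata"].get("labels", {})
        running = pod.get("status", {}).get("phase") == "Running"
        if labels.get("app.kubernetes.io/component") == "controller" and running:
            return pod["metadata"]["name"]
    raise LookupError("no running ingress-nginx controller pod found")


def parse_access_line(line: str):
    """Return method, path, status and upstream name from one access-log line, or None."""
    m = ACCESS_RE.search(line)
    return m.groupdict() if m else None


if __name__ == "__main__":
    raw = subprocess.run(
        ["kubectl", "get", "pods", "-n", "ingress-nginx", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    name = controller_pod(json.loads(raw))
    logs = subprocess.run(
        ["kubectl", "logs", "-n", "ingress-nginx", name, "--tail=50"],
        capture_output=True, text=True, check=True,
    ).stdout
    for line in logs.splitlines():
        entry = parse_access_line(line)
        if entry:  # skip the controller's own (non-access-log) messages
            print(entry)
```

nginx logs the request line as the client sent it, so the path you see is the original one, before any rewrite. A 404 next to your service's upstream name means nginx did route the request to your service and the 404 came from the backend.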

The logs give us a clue about why we are getting a 404 error from our server. Our server expects GET requests on “/” and “/mars”, but the ingress controller forwards the full request path, including the /my-server prefix, so the server receives /my-server and /my-server/mars, which it doesn't know about. To solve this, we need to rewrite /my-server to /, /my-server/mars to /mars, and so on. This can be done with “rewrite annotations”. Modify the ingress rule as follows (see ingress_final.yaml).

```yaml
# Add this annotation:
metadata:
  name: example-ingress
  annotations:
    # This is needed!
    nginx.ingress.kubernetes.io/rewrite-target: /$2

# And modify the Prefix path as follows:
- http:
    paths:
    # The ingress-nginx rewrite docs use pathType: ImplementationSpecific for regex paths;
    # newer controller versions with strict path validation may reject a regex in a Prefix path.
    - path: /my-server(/|$)(.*)
      pathType: Prefix
      backend:
        service:
          name: my-server-svc
          port:
            number: 5001
```

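In this path, the first capture group (/|$) matches either a slash or the end of the path, and the second group (.*) captures everything after it. That second capture is assigned to the placeholder $2, which the rewrite-target annotation uses, so /my-server/mars is forwarded to our server as /mars and /my-server as /. See this for more info about rewrite rules.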
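To check what the rewrite will do before redeploying, you can emulate it with Python's `re`. This sketch applies the same path regex from the start of the request path (nginx regex locations are anchored at the start too) and builds `/` plus the second capture, which is what `rewrite-target: /$2` produces. It ignores query strings and nginx's case-insensitive matching; `rewrite` is a hypothetical helper.

```python
import re

# Same regex as the ingress path above.
PATH_RE = re.compile(r"/my-server(/|$)(.*)")


def rewrite(request_path: str):
    """Emulate rewrite-target: /$2 for the /my-server(/|$)(.*) path; None means no match."""
    m = PATH_RE.match(request_path)  # match() anchors at the start of the string
    if m is None:
        return None
    return "/" + m.group(2)


if __name__ == "__main__":
    for p in ["/my-server", "/my-server/", "/my-server/mars", "/my-serverx"]:
        print(p, "->", rewrite(p))
```

Note how `/my-serverx` does not match: the `(/|$)` group forces the prefix to end at a slash or at the end of the path.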

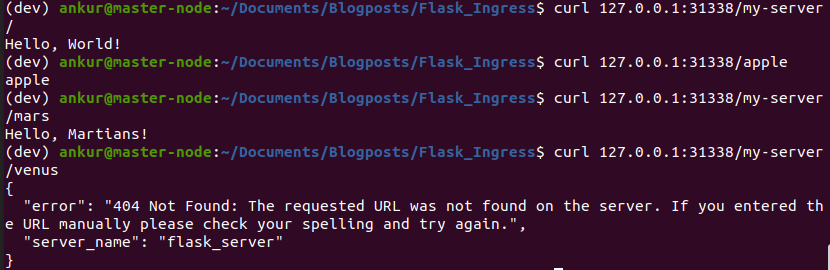

Delete and recreate the ingress. Now, voilà! Everything works 🙂

Hope you found this information helpful. Please leave a comment if you did!

Thanks, Ankur, tried it; worked fine for me.

I have been doing a lot of work with Tornado and Flask micro-services, while working on Athena, JP’s Python based eco-system for trading and risk pipelines, so I am very much familiar with the setup, but not what you described here.